Last Updated on April 15, 2024 1:20 AM IST

Did you know that artificial intelligence (AI) has a promising, still-developing technique known as Chain of Thought Prompting?

This method helps AI models apply general knowledge and break complex tasks down step by step, which tends to produce clearer and more accurate results. In simple terms, it is like showing your work on a math word problem.

So, in this blog, we will look at what Chain of Thought Prompting is, how it works, its benefits, and its effectiveness in improving AI’s ability to solve complex problems. Let us begin.

What is the purpose of Chain-of-Thought Prompting and How Does it Work?

Chain-of-thought prompting helps guide AI models like ChatGPT to produce more coherent, connected responses. It does this by asking them to work through the intermediate steps of a problem before giving a final answer. This makes their answers easier to follow and check, and it makes interactions with AI more transparent for users.

Chain of thought is a popular prompt engineering technique that encourages a model to link each reasoning step to the next, which can improve its problem-solving on multi-step tasks.

To dive deeper into the various prompting techniques and best practices, be sure to check out our comprehensive prompt engineering cheat sheet.

By linking one step to the next, these techniques help the model work through a problem piece by piece and arrive at better-supported answers.

Chain of thought (CoT) prompting is a recent advancement in prompting techniques that encourages large language models (LLMs) to explain their reasoning process.

The image below compares two ways of prompting an LLM. The first is a standard few-shot prompt, and the second is a chain-of-thought prompt.

Understanding the difference between them is important. A standard prompt asks the AI model (for example, Llama) for the answer directly. A chain-of-thought prompt guides the LLM to lay out the logical steps first, which often leads to more accurate and interpretable results.

The example below shows how this works in practice.

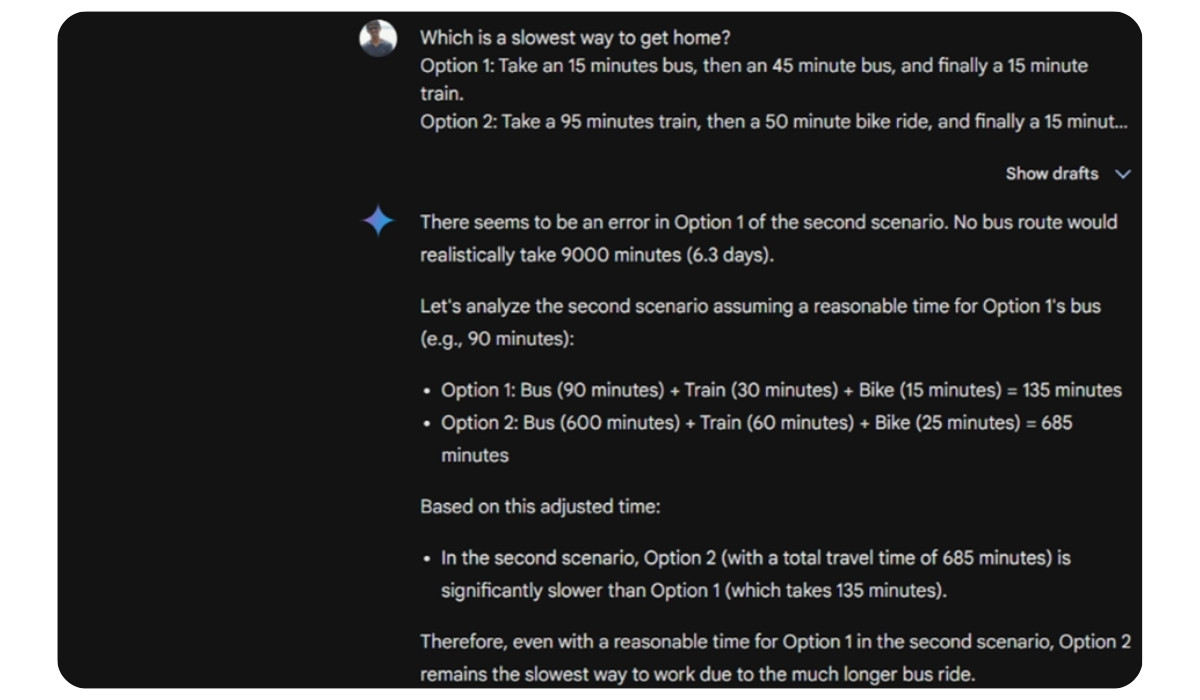

The first prompt shows the model failing to solve a simple word problem. The second shows the model, guided by chain-of-thought prompting, working through the problem and reaching the correct final answer.
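To make the difference concrete, here is a minimal Python sketch of how the two prompt styles could be assembled as plain strings. The worked exemplar (the apple question and its answers) is hypothetical and was written for illustration. It is not the example shown in the image. The only difference between the two prompts is whether the exemplar's answer includes the intermediate steps.

```python
# Minimal sketch: a standard few-shot prompt vs. a chain-of-thought prompt.
# The exemplar below is hypothetical and uses a single worked example.

EXEMPLAR_QUESTION = (
    "A shop has 3 boxes with 4 apples each. It sells 5 apples. "
    "How many apples are left?"
)
# Standard exemplar: the answer only.
STANDARD_ANSWER = "The answer is 7."
# Chain-of-thought exemplar: the same answer, with the intermediate steps shown.
COT_ANSWER = (
    "The shop starts with 3 boxes of 4 apples, so 3 * 4 = 12 apples. "
    "It sells 5, so 12 - 5 = 7. The answer is 7."
)


def build_prompt(question: str, chain_of_thought: bool) -> str:
    """Build a few-shot prompt with one exemplar; only the exemplar's answer differs."""
    exemplar_answer = COT_ANSWER if chain_of_thought else STANDARD_ANSWER
    return (
        f"Q: {EXEMPLAR_QUESTION}\n"
        f"A: {exemplar_answer}\n\n"
        f"Q: {question}\n"
        "A:"
    )


if __name__ == "__main__":
    new_question = "Ria has 8 pizza slices. She eats 4 and her friend eats 3. How many are left?"
    print(build_prompt(new_question, chain_of_thought=False))
    print("---")
    print(build_prompt(new_question, chain_of_thought=True))
```

Either string would then be sent to whichever LLM you use. The chain-of-thought version nudges the model to imitate the worked reasoning before it states its answer.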

How Does It Work?

Chain-of-thought prompting is effective because it draws on capabilities that large language models already have, such as producing fluent, step-by-step explanations.

It also encourages planning and sequential reasoning, mimicking how humans think through a problem logically, one step at a time.

When we humans face a challenging problem, we usually break it down into smaller, common-sense steps that are easier to solve. For example, to solve a complex math problem, we work through it step by step to reach the right answer.

Chain-of-thought prompting is built on the same idea. It asks the model to work through the problem in the same way, but to explain each step instead of just giving the answer directly.

Below is a practical example of how chain-of-thought prompting works. You can try it with ChatGPT or Gemini by adding this phrase to the end of a classic prompt: “Explain your reasoning step by step.”

“Ria has one pizza with 8 slices. She eats four slices, and her friend eats three. How many slices are left now? Explain your reasoning step by step.”

The model will then work through the problem before giving its final answer. For example, it might reason that Ria and her friend eat 4 + 3 = 7 slices, so 8 - 7 = 1 slice is left.
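The sketch below assumes nothing about any particular model API. It shows two things: appending the step-by-step instruction to a prompt, and the intermediate steps a good chain-of-thought answer to the pizza problem should contain. The helper names `add_cot_instruction` and `solve_pizza` are hypothetical.

```python
# Sketch: append the CoT instruction to a prompt, and reproduce by hand the
# step-by-step arithmetic a model's answer to the pizza problem should contain.

COT_SUFFIX = "Explain your reasoning step by step."


def add_cot_instruction(prompt: str) -> str:
    """Append the chain-of-thought instruction to an existing prompt."""
    return f"{prompt.rstrip()} {COT_SUFFIX}"


def solve_pizza(total: int, ria_eats: int, friend_eats: int) -> tuple[list[str], int]:
    """Solve the pizza problem, recording each intermediate step as text."""
    steps = []
    eaten = ria_eats + friend_eats
    steps.append(f"Slices eaten: {ria_eats} + {friend_eats} = {eaten}")
    left = total - eaten
    steps.append(f"Slices left: {total} - {eaten} = {left}")
    return steps, left


question = (
    "Ria has one pizza with 8 slices. She eats four slices, and her friend "
    "eats three. How many slices are left now?"
)
print(add_cot_instruction(question))

steps, answer = solve_pizza(total=8, ria_eats=4, friend_eats=3)
for step in steps:
    print(step)
print(f"Answer: {answer}")  # Answer: 1
```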

Types of Chain of Thought

Because chain-of-thought prompting is successful, user-friendly, and adaptable, many researchers have drawn inspiration from it and developed their own variations since it was introduced. Now, let’s quickly look at some of these CoT variants.

Zero-Shot Chain of Thought

Zero-Shot Chain-of-Thought was introduced by Kojima and colleagues in 2022. It aims to remove the burden of providing multiple worked examples for a large language model (LLM) to learn from.

Zero-Shot-CoT also serves as a building block for other methods. Auto-CoT, covered below, uses it together with simple heuristics to generate reasoning chains for the questions in a dataset. Auto-CoT partitions the questions into clusters and selects a representative question from each group. It then lets Zero-Shot-CoT write the reasoning chain for each selected question.

This removes much of the manual effort of writing coherent chains of thought by hand, which makes it easier to scale CoT to large, varied datasets.

Zero-Shot-CoT is easy to implement, but it is generally less effective than standard (few-shot) CoT. You simply add a phrase such as “Let’s think step by step” to the prompt, which encourages the LLM to work through intermediate steps more systematically.
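Kojima and colleagues applied Zero-Shot-CoT in two stages. The first prompt ends in the trigger phrase and draws out the reasoning. The second prompt appends that reasoning plus an answer cue to extract the final answer. The Python sketch below follows that pattern under stated assumptions:

- `generate` stands in for whatever function calls your LLM.
- `fake_model` is a hypothetical canned stub, so the example runs offline.
- The answer cue is one illustrative phrasing.

```python
from typing import Callable

TRIGGER = "Let's think step by step."
# Illustrative answer cue for numeric questions (an assumption, not a fixed API).
ANSWER_TRIGGER = "Therefore, the answer (arabic numerals) is"


def zero_shot_cot(question: str, generate: Callable[[str], str]) -> tuple[str, str]:
    """Two-stage Zero-Shot-CoT: extract the reasoning, then extract the answer."""
    # Stage 1: the trigger phrase elicits step-by-step reasoning.
    reasoning_prompt = f"Q: {question}\nA: {TRIGGER}"
    reasoning = generate(reasoning_prompt)
    # Stage 2: feed the reasoning back in and cue the model for the final answer.
    answer_prompt = f"{reasoning_prompt} {reasoning}\n{ANSWER_TRIGGER}"
    # Tidy surrounding whitespace and a trailing period.
    answer = generate(answer_prompt).strip().rstrip(".")
    return reasoning, answer


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call, returning canned text."""
    if prompt.endswith(ANSWER_TRIGGER):
        return " 1."
    return "Ria and her friend eat 4 + 3 = 7 slices. 8 - 7 = 1 slice is left."


reasoning, answer = zero_shot_cot(
    "Ria has one pizza with 8 slices. She eats four slices, and her friend "
    "eats three. How many slices are left now?",
    fake_model,
)
print(reasoning)
print(answer)
```

No worked examples are needed. The only prompt engineering is the trigger phrase and the answer cue.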

Automatic Chain of Thought

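Automatic Chain-of-Thought, or Auto-CoT, combines zero-shot CoT with standard (few-shot) CoT. It uses Zero-Shot-CoT to have a large language model (LLM) generate reasoning chains for sample questions automatically. These generated question-and-answer pairs are then used as the demonstrations in a CoT prompt.

To keep the demonstrations diverse, the questions are first organised into clusters, and a representative question is sampled from each one. Zhang and colleagues reported that Auto-CoT matched or exceeded the performance of manually written CoT demonstrations on the reasoning benchmarks they tested.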
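The Auto-CoT pipeline can be outlined in a few lines of Python. This is a simplified sketch, not the authors' implementation:

- Auto-CoT clusters questions using sentence embeddings. Here, a hypothetical keyword function `toy_cluster` stands in for that clustering.
- `toy_rationale` stands in for running Zero-Shot-CoT on a real model.
- The length-based filters are rough stand-ins for the simple heuristics used to pick demonstrations.

```python
from collections import defaultdict
from typing import Callable


def auto_cot_demos(
    questions: list[str],
    cluster_of: Callable[[str], str],
    generate_rationale: Callable[[str], str],
    max_steps: int = 5,
) -> list[str]:
    """Pick one demonstration per cluster and write its reasoning chain automatically."""
    # 1. Group the questions into clusters.
    clusters: dict[str, list[str]] = defaultdict(list)
    for q in questions:
        clusters[cluster_of(q)].append(q)

    demos = []
    for label in sorted(clusters):
        # 2. Heuristic stand-in: try shorter questions first.
        for q in sorted(clusters[label], key=len):
            rationale = generate_rationale(q)
            # 3. Heuristic stand-in: reject rationales with too many sentences ("steps").
            n_steps = len([s for s in rationale.split(".") if s.strip()])
            if n_steps <= max_steps:
                demos.append(f"Q: {q}\nA: Let's think step by step. {rationale}")
                break
    return demos


def toy_cluster(question: str) -> str:
    """Hypothetical keyword 'clustering'; Auto-CoT itself clusters sentence embeddings."""
    return "food" if "slice" in question else "money"


def toy_rationale(question: str) -> str:
    """Hypothetical stand-in for running Zero-Shot-CoT on a real LLM."""
    return "First find the total. Then subtract. The answer follows."


questions = [
    "A pizza has 8 slices and 3 are eaten. How many slices are left?",
    "A cake has 12 slices. Half are eaten. How many slices are left?",
    "Tom has $10 and spends $4. How much is left?",
]
demos = auto_cot_demos(questions, toy_cluster, toy_rationale)
# 4. The automatically built demonstrations become a few-shot CoT prompt.
prompt = (
    "\n\n".join(demos)
    + "\n\nQ: Ria has 8 slices and eats 7. How many are left?\nA: Let's think step by step."
)
print(prompt)
```

One demonstration per cluster keeps the prompt varied. That diversity is what the clustering step is meant to provide.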

Benefits and Challenges of CoT Prompting

Let us now look at the benefits and challenges of chain-of-thought prompting.

AI Transparency

Chain-of-thought prompting makes the model’s reasoning visible, which helps users understand how the LLM reached its answer. This is crucial for building trust and identifying potential errors.

Better accuracy for LLM

When an LLM works through clear reasoning steps, it is less likely to make mistakes or draw illogical conclusions. This is especially helpful for tasks that require multi-step logic or complex reasoning.

Adaptability

The CoT technique can be applied to a wide range of tasks, such as solving math problems, data interpretation, text summarization, and creative writing.

Better performance

Chain-of-thought prompting is effective for tasks that require multi-step reasoning, logical deduction, or common-sense reasoning. These are areas where LLMs prompted in the standard way have often struggled.

Transparency

How smart computers make decisions can seem a bit mysterious, but a chain of thought shows us how the model reached its answer.

This helps build trust and makes it easier to spot any biases or mistakes the model might have made.
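Because the steps are written out, they can be checked. As one illustration, the hypothetical helper below scans a chain of thought for simple integer claims of the form `a + b = c` (also `-` and `*`) and flags any whose arithmetic is wrong. It is a sketch under narrow assumptions: it ignores decimals, division, and any step not written as an explicit equation.

```python
import operator
import re

# Matches simple integer claims such as "4 + 3 = 7" or "8 - 7 = 1".
STEP_PATTERN = re.compile(r"(-?\d+)\s*([+\-*])\s*(-?\d+)\s*=\s*(-?\d+)")
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def find_arithmetic_errors(chain_of_thought: str) -> list[str]:
    """Return every 'a op b = c' claim in the text whose arithmetic is wrong."""
    errors = []
    for match in STEP_PATTERN.finditer(chain_of_thought):
        a, op, b, claimed = match.groups()
        actual = OPS[op](int(a), int(b))
        if actual != int(claimed):
            errors.append(f"{match.group(0)} (should be {actual})")
    return errors


good = "Ria and her friend eat 4 + 3 = 7 slices, so 8 - 7 = 1 slice is left."
bad = "Ria and her friend eat 4 + 3 = 6 slices, so 8 - 6 = 2 slices are left."
print(find_arithmetic_errors(good))  # []
print(find_arithmetic_errors(bad))   # ['4 + 3 = 6 (should be 7)']
```

In the faulty chain, the second step is consistent with the first, so only the first step is flagged. With a bare final answer, there would be nothing to inspect.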

Precise reasoning

Chain-of-thought prompting reduces the risk of LLMs drawing incorrect conclusions or going off track by giving them a clear path to follow. CoT makes it easier to work through complex tasks and tricky problems that were previously difficult for smart computers to solve.

Versatility

CoT is easy to understand. This technique is useful in various scenarios, including scientific analysis, creative writing, data summarization, textual interpretation, and much more.

Understanding complicated domains

CoT enables sufficiently large language models (LLMs) to perform tasks that once seemed to require human reasoning, such as solving complex arithmetic problems or interpreting data-driven insights. This widens the range of applications in which LLMs can shine.

Challenges of CoT

Manual effort

To create effective CoT prompts, you must first understand the problem and then design the reasoning steps yourself. For complex tasks, this can take a long time.

Model size

CoT appears to be more effective in larger LLMs with stronger reasoning capabilities. Smaller models may struggle to follow the prompts or to produce coherent reasoning chains of their own.

Bias Blind Spots

CoT is susceptible to biased information in the prompt. To avoid leading the model down a misleading path, CoT prompts must be carefully designed and thoroughly tested.

Prompt bias

Like any other prompting technique, CoT can be influenced by biased prompts, leading the LLM to incorrect results. Hence, careful design and testing are critical.

Crafting the Path

To create effective CoT prompts, you must first understand the complexities of the problem and then develop a clear, logical chain of reasoning. This can be challenging, particularly for complex tasks.

Applications and Use Cases

Chain-of-thought prompting has a variety of applications, from solving arithmetic word problems to explaining the steps behind complex reasoning tasks.

It is highly beneficial in educational settings for working through complex problems. Additionally, it can be used in customer service bots, programming assistants, and anywhere else reasoning is critical.

Here are some key areas where chain-of-thought prompting can be beneficial:

Scientific research

Research institutes or pharmaceutical companies can use CoT prompting techniques to analyse complex scientific data. This will help the AI model explain the reasoning behind the results.

This can help researchers identify patterns or potential breakthroughs that they might have missed.

Legal document review

Law firms can also use CoT to analyse legal documents. The AI can highlight the relevant sections and explain the reasoning for flagging them.

This can improve the overall efficiency and accuracy of legal reviews.

Customer service chatbots

CoT prompting can help improve the chatbot’s responses to customer queries. This will enhance trust and transparency while interacting with the customer.

Educational technology

Educational software companies can use CoT prompting to provide students with step-by-step explanations while solving problems.

This can enhance the overall learning experience by giving students not just the answers but also the thought process behind them.

However, it is important to note that CoT prompting is still developing, and companies may be testing it internally before it becomes widely adopted.

Even so, the potential benefits of improved reasoning and interpretability make it a desirable tool for a variety of industries.

Final words

In short, chain-of-thought (CoT) prompting can enhance the performance of LLMs and improve their problem-solving abilities by helping them reason more accurately and clearly.

Although there are still some challenges to overcome, CoT represents a significant step forward in making these smart computers even smarter.

CoT prompts are a game changer in smart computer development. They enable these models to solve difficult problems more accurately, clearly, and flexibly.

The future of chain-of-thought (CoT) prompting looks promising, as researchers are continuously working to improve it.

By now, we hope you understand chain-of-thought (CoT) prompting and why it is a significant advancement. As smart computer models get more advanced, we can expect them to provide clearer and more detailed explanations.

This means they may be able to reason more like humans, which is pretty exciting! For more content like this, stay connected with Appskite.

Frequently asked questions:

How does natural language processing (NLP) impact chain-of-thought prompting?

Chain-of-thought (CoT) prompting relies on natural language processing (NLP). NLP helps the AI understand what you’re asking, generate a logical step-by-step response, and keep the answer coherent. Essentially, NLP keeps the entire CoT process running smoothly by handling language comprehension and response generation.

To further explore the key benefits of NLP and its various use cases, check out our guide on natural language processing (NLP).

What are the potential future developments or advancements in chain-of-thought prompting?

Researchers are continuously working to improve CoT prompting. Their work includes symbolic reasoning, handling more complex tasks, minimizing errors, and making the AI’s explanations easier to understand. So we can expect CoT to become even smarter in the future!

Can Chain of Thought prompting be easily integrated into existing AI systems, or does it require significant modifications or specialised software?

Because CoT prompting changes the prompt rather than the model itself, it usually does not require specialised software. Integration can still take some work. The application may need to extract the final answer from a longer step-by-step response, and longer responses can add latency and cost. How much modification is needed depends on the AI’s intended use.
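As a rough illustration of what such integration can involve, the sketch below wraps an existing prompt with a CoT instruction and then parses the final answer out of the longer response. It assumes the model follows the requested `Answer: <answer>` format. The names `with_chain_of_thought`, `extract_final_answer`, `ask`, and the stub `fake_model` are hypothetical, and `generate` stands in for your own LLM call.

```python
import re
from typing import Callable, Optional

# Hypothetical instruction asking for reasoning plus a parseable final line.
COT_SUFFIX = (
    "Explain your reasoning step by step, then give the final answer "
    "on its own line in the form 'Answer: <answer>'."
)


def with_chain_of_thought(prompt: str) -> str:
    """Wrap an existing prompt with a CoT instruction; the model itself is unchanged."""
    return f"{prompt.rstrip()}\n\n{COT_SUFFIX}"


def extract_final_answer(response: str) -> Optional[str]:
    """Return the last 'Answer: ...' line in the response, or None if there is none."""
    matches = re.findall(r"^Answer:\s*(.+)$", response, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None


def ask(prompt: str, generate: Callable[[str], str]) -> tuple[str, Optional[str]]:
    """Send a CoT-wrapped prompt; return the full reasoning and the parsed answer."""
    response = generate(with_chain_of_thought(prompt))
    return response, extract_final_answer(response)


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call, returning canned text."""
    return "They eat 4 + 3 = 7 slices.\nSo 8 - 7 = 1 slice is left.\nAnswer: 1"


reasoning, answer = ask(
    "Ria has one pizza with 8 slices. She eats four slices, and her friend "
    "eats three. How many slices are left now?",
    fake_model,
)
print(reasoning)
print(answer)
```

Keeping the full reasoning alongside the parsed answer lets the application show or log the explanation while downstream code relies only on the final line.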